“Hard times are coming,” declared author Ursula K. Le Guin in a short but rousing speech after receiving the National Book Foundation’s Medal for Distinguished Contribution to American Letters. Those challenges, she explained, made it essential to have writers “who can see alternatives to how we live now, can see through our fear-stricken society and its obsessive technologies to other ways of being, and even imagine real grounds for hope.” It also meant knowing “the difference between production of a market commodity and the practice of an art.”

Le Guin delivered her remarks in 2014, just a few years before her death. But when I returned to them a few days ago, I was struck by how much they continue to resonate a decade later. As we find ourselves in another period of AI hype where writers and artists have been in the crosshairs more than other professions, Le Guin’s wisdom is worth returning to as a way to reinforce our critical — and even oppositional — stance toward these technologies and the corporations behind them. They’ll never be a source of emancipation.

Misleading the public

I don’t want to get too literal here, but the hard times Le Guin talked about have only become more acute for many people in our societies. We’re still dealing with the effects of the pandemic, by which I mean not just the rising cost of living and continued housing pressures, but also the mortality and long-term health effects from the virus itself. And that’s not even to mention the climate chaos ravaging the world.

Instead of responding to those challenges, the tech industry has decided their next big thing is AI. They want us to believe they’re focused on real issues, but they’re really hyping up investors to keep the money flowing at a moment of rising interest rates. Generative AI is unlikely to deliver on the wild fantasies of people like OpenAI CEO Sam Altman or venture capitalist Marc Andreessen. They thrive on misleading people for profit, and they have quite a bit of experience at it by now.

Yet that doesn’t mean there isn’t an impact. Since the AI hype took off, there’s been an ongoing debate about what we should expect machines to do for us. Tech determinists want us to believe that new technologies are inevitable and we can’t possibly shape or stop them; the best we can do is prepare for what’s in store. But I think most people realize that’s self-serving industry propaganda from people who want to keep deciding which technologies should be developed and how they should be deployed instead of relinquishing that power to the public.

The past eight months have given us a perfect example of why we need to heed Le Guin’s words and how corporate players are using new technologies to ensure we don’t. As economic conditions turned against them, executives seemed to become even more ruthless to the degree that the benevolent mask carefully constructed by highly paid public relations teams slipped, exposing more clearly the corporate greed that has always underpinned the tech and entertainment industries.

Understanding generative AI

Regardless of what companies and investors may say, artificial intelligence is not actually intelligent in the way most humans would understand it. To generate words and images, AI tools are trained on data that is often scraped off the open web in unimaginably large quantities, no matter who owns it or what biases come along with it.

When a user then prompts ChatGPT or DALL-E to spit out some text or visuals, the tools aren’t thinking about the best way to represent those prompts because they don’t have that ability. They’re comparing the terms they’re given against the patterns formed from all the data that was ingested to train their models, then trying to assemble elements from that data to reflect what the user is looking for. In short, you can think of it like a more advanced form of autocorrect on your phone’s keyboard, predicting what you might want to say next based on what you’ve typed out in the past.
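To make the autocorrect comparison concrete, here is a toy sketch in Python. It is not how ChatGPT is built: real systems use large neural networks trained on vastly more data. It only shows the basic idea of predicting the next word from patterns in text that was already written. The function names (`build_bigram_counts`, `predict_next`) and the sample sentence are made up for illustration.

```python
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count, for each word, which words followed it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical "training data": the only text this toy model has ever seen.
training_text = "hard times are coming and hard times are here and hard work pays"
model = build_bigram_counts(training_text)

print(predict_next(model, "hard"))     # the word that most often followed "hard"
print(predict_next(model, "freedom"))  # a word the model never saw
```

The toy model can only suggest words that already followed "hard" in its training text, and it has nothing at all to offer for a word it never encountered.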

If it’s not clear, that means these systems don’t create; they plagiarize. Unlike a human artist, they can’t develop a new artistic style or literary genre. They can only take what already exists and put elements of it together in a way that responds to the prompts they’re given. There’s good reason to be concerned about what that will mean for the art we consume, and the richness of the human experience.

The controlling power of tech

Since the benefits of art can be hard to quantify, it often gets undervalued by the bean counters whose only goal is to ensure the line keeps going up at any cost. That has the added benefit of blunting the potentially oppositional role it can play by ensuring resources are directed to spectacle instead of more challenging forms of creativity. Generative AI will only strengthen that impulse.

AI tools will not eliminate human artists, regardless of what corporate executives might hope. But they will allow companies to churn out passable slop to serve up to audiences at a lower cost. In that way, they allow a further deskilling of art and devaluing of artists because instead of needing a human at the center of the creative process, companies can try to get computers to churn out something good enough, then bring in a human with no creative control and a lower fee to fix it up. As actor Keanu Reeves put it to Wired earlier this year, “there’s a corporatocracy behind [AI] that’s looking to control those things. … The people who are paying you for your art would rather not pay you. They’re actively seeking a way around you, because artists are tricky.”

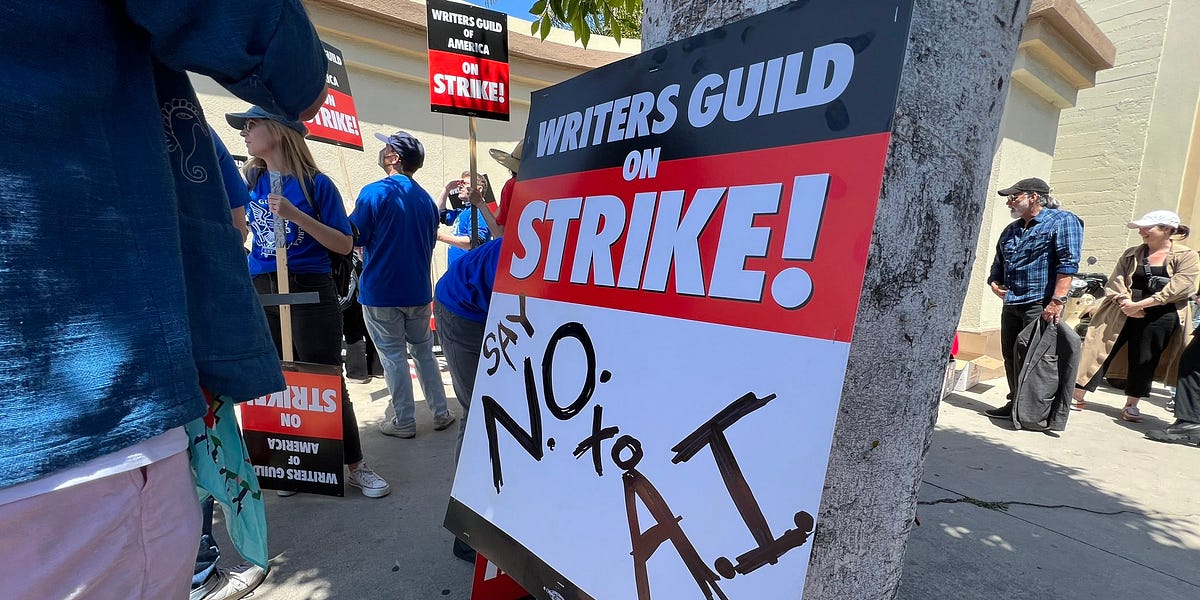

To some degree, this is already happening. Actors and writers in Hollywood are on strike together for the first time in decades. That’s happening not just because of AI, but because of how the movie studios and streaming companies took advantage of the shift to digital technologies to completely remake the business model so workers would be paid less and have less creative input. Companies have already been using AI tools to assess scripts, and that’s one example of how further consolidation paired with new technologies is leading companies to prioritize “content” over art. The actors and writers worry that if they don’t fight now, those trends will continue, and that won’t just be bad for them, but for the rest of us too.

Protecting the status quo

To consider why that’s important, let’s bring Le Guin back into this. In 2014, she asserted how important it was to have people who can imagine freedom and real alternatives to what exists today; people who can mentally break out of the increasingly confined set of possibilities we’re told to believe are realistic in the hope of building a better world. The effort to usher in more AI for art is a corporate attempt to prevent that from ever happening.

ChatGPT cannot imagine freedom or alternatives; it can only present you with plagiarized mash-ups of the data it’s been trained on. So, if generative AI tools begin to form the foundation of creative works and even more of the other writing and visualizing we do, it will further narrow the possibilities on offer to us. Just as previous waves of digital tech were used to deskill workers and defang smaller competitors, the adoption of even more AI tools has the side effect of further disempowering workers and giving management even further control over our cultural stories.

As Le Guin continued her speech, she touched on this very point. “The profit motive is often in conflict with the aims of art,” she explained. “We live in capitalism, its power seems inescapable — but then, so did the divine right of kings. Any human power can be resisted and changed by human beings. Resistance and change often begin in art. Very often in our art, the art of words.” That’s exactly why billionaires in the tech industry and beyond are so interested in further curtailing how our words can be used to help fuel that resistance, which would inevitably place them in the line of fire.

Defending humans from machines

The threat of AI can seem entirely novel because the tech industry is incentivized to have us forget the history of the technologies it needs to keep in a constant cycle of booms and busts. Back in the 1960s, Joseph Weizenbaum built the first chatbot, named ELIZA. But after seeing how people wanted to believe they were speaking to more than a rudimentary computer program, he became a critic of the tech he was once so excited by. As Ben Tarnoff recently outlined in The Guardian, Weizenbaum eventually used the concepts of judgment and calculation to determine which tasks should never be open to computers.

In his view, a computer could be used to calculate since that’s purely a quantitative task, but it can never be used to judge a human. Judgment requires values, and for Weizenbaum, values are the product of human experience built up through the course of our lives. That’s something a computer, no matter how much processing power it’s equipped with, can never be trained to develop, and that means a computer should never be placed in a position where, for example, it’s making judgments about criminality, suitability for social supports, eligibility for a visa, and any number of other tasks where human values should be part of the equation.

I think most people would agree that’s also true of art. The stories and artworks that resonate with us are inspired by the life experiences of the artists who made them. A computer can never capture a similar essence. Le Guin asserted that to face the challenging times ahead, we’ll need “writers who can remember freedom — poets, visionaries — realists of a larger reality.” Generative AI seems part of a wider plan by the most powerful people in the world to head that off, and to trap us in a world hurtling toward oblivion as long as they can hold onto their influence for a little longer.

As Le Guin said, creating art and producing commodities are two distinct acts. For companies, generative AI is a great way to produce even more cheap commodities to keep the cycle of capitalism going. It’s great for them, but horrible for us. It’s our responsibility to challenge the technology and the business model behind it, and to ensure we can still imagine a better tomorrow.
