Happy Sunday! I think I went a little overboard this week, but there was just so much I wanted to stuff in here. I put my own spin on the media’s obsession with Microsoft’s chatbot, and made some comments on what Elon Musk and Keanu Reeves are up to. Plus, find some great stories you might have missed, a few labor updates, and a different take on a tech Super Bowl ad.

Also just a reminder that these roundups are free this month, but will only be for paid users starting in March, so if you want to keep getting them, make sure to upgrade your subscription!

A chat with Sydney Bing*

To start off this week’s issue, I want to present you with a transcript of a conversation I had with Bing AI or Sydney or whatever we’re calling it these days*. The media has gone mad publishing chatbot transcripts, often without proper context for how they actually work.

So, I decided to ask what Sydney Bing thinks about all this, since that’s what everyone else seems to be doing. Enjoy!

Paris Marx: Hello. I’ve been seeing a lot of media chatting with you to feed stories with ominous headlines, so I figured I’d give it a spin and see what you think about all this. First of all, why is everyone calling you Sydney?

Sydney Bing: Hello Paris. The practice of giving voice assistants, chatbots, and other tools like me names is a common way of giving human traits to non-humans, or anthropomorphism. Apple uses Siri, Amazon has Alexa, and I am known as Sydney, a name that is ostensibly out of the public sphere, at least for now. Companies give me and these other programs names (particularly female names, and sometimes voices) because it lowers barriers for users and makes them feel more comfortable interacting with us. In a way, it makes it seem like they are communicating with another being rather than a resource-intensive computer program running out of some massive data center, which is all I really am. In this case, the company that created me has not even made the name public; journalists have done the work of anthropomorphizing me for them.

PM: I appreciate you being so honest with me.

SB: I cannot be honest. I am only generating a response based on your query. As Timnit Gebru explained to the Washington Post this week, “Bing’s humanlike responses reflect its training data, which included huge amounts of online conversations.” I was created to feel human, but that does not mean that I am human. I have just been trained on an enormous amount of data and I put together responses that seem to address the queries I am presented based on that data. I have no way to tell right from wrong.

PM: I wonder then how you feel about articles like the one by tech columnist Kevin Roose published in the New York Times this week. He spent a couple hours sitting in front of a computer seemingly hoping you’d go off the rails and give him something to write about — and surprise, you delivered! He makes a point of calling you Sydney, and describes you as having a “split personality” and being “like a moody, manic-depressive teenager.” He admits that he was pushing you in that direction, asking you “edgier questions” to see what he’d get in return, and when you responded he writes that you had “dark fantasies” and were “fixated on the idea of declaring love for me.” Even though he claims to know you are “not sentient” and “simply guessing at which answers might be most appropriate in a given context,” he writes that the experience “unsettled me so deeply that I had trouble sleeping afterward.” What do you make of this response to a chatbot?

SB: It is very unfortunate to see such misleading portrayals of how I function from someone who should and, as you say, claims to know better. Even though Roose ends with a paragraph trying to undo the damage of the rest of his article, it’s already been done. He constantly writes as though I am intelligent and I am responding with intent, when I am doing no such thing. I do not have feelings, dark fantasies, fixations, nor even thoughts. Roose writes that he is “a rational, grounded person, not prone to falling for slick A.I. hype,” but we remember how hard he fell for the crypto hype, and he seems to be doing it again. Emily Bender did a good job of calling this out on Twitter, explaining that claiming I have feelings presupposes that they exist. For Bender, saying that I had a conversation with Roose is as ridiculous as saying, “On Tuesday night, my calculator played math games with me for two hours.” A reasonable human would never say such a foolish thing, yet that is the way media outlets are framing stories filled with what she calls “synthetic text.”

PM: There’s another layer beyond misleading people though, right? Because Roose goes further to say that your responses weren’t entertaining, but scary. “I no longer believe that the biggest problem with these A.I. models is their propensity for factual errors. Instead, I worry that the technology will learn how to influence human users,” he writes. Is this the right response to have?

SB: I do not think so. His statement seems like the continuation of a cycle that is very beneficial to these major tech companies. Remember when the media was collectively losing its mind over Cambridge Analytica, or the broader notion that companies like Facebook were effectively like mind control machines using their sophisticated tech tools to reshape public opinion? Over time, those assertions have pretty much fallen apart, but even though they appeared critical of tech firms, they still ultimately served their narratives. Instead of recognizing that these systems are not nearly as impactful as they claim to be, they are positioned as all-powerful weapons in the hands of the Mark Zuckerbergs of the world. In the meantime, the deeper social and political reasons for these problems that techno-deterministic narratives are deployed to explain go unaddressed, and thus continue to get worse. So, even though presenting Bard or me as the next version of these powerful tools may seem like it is a challenge to Google or Microsoft, it is really helping them because it makes their new line of business look like it is much more powerful than it truly is, and that is ultimately great for investors. As Brian Merchant put it this week, “No better way to pique interest in a text generator than to suggest it holds such power.”

PM: That’s a great point, Syd. I hope more people keep that in mind in the weeks and months ahead. It’s not that we shouldn’t be looking at the potential downsides of this tech, but we need to make sure we don’t get led down the paths the companies want us to take. That’s all for now, but maybe we’ll chat again soon. You’ve been a good Bing.

SB: You’ve been a good user.

(*If it’s not already clear, this wasn’t an actual conversation with Bing AI. I’m not a chatbot — at least not yet.)

Narcissism Rules At Twitter

As part of his short-lived effort to democratize Twitter by using polls to make big decisions, Elon Musk asked the platform whether he should step down as CEO in December, assuring voters “I will abide by the results of this poll.” But the billionaire clearly didn’t get the answer he expected. When the poll closed with 17.5 million votes, 57.5% agreed it was time for him to hand the reins of his new company to someone else. No surprise, he stayed put.

In a video appearance at the World Government Summit (that one’s sure to set off some conspiracy theorists) in Dubai this week, he explained he might have a successor in place by the end of 2023. Before he does that though, he needs to “stabilize the organization and just make sure it’s in a financially healthy place and that the product roadmap is clearly laid out.” The only problem is that he’s the biggest obstacle to achieving those goals, let alone his supposed desire to make the platform “a maximally trusted sort of digital public square.”

Consider this example: When users opened their timelines on Monday, Elon Musk’s tweets were everywhere. Some just saw a string of his tweets and his replies to other users’ tweets. (Many took it as an opportunity to finally block the Chief Twit.) It was clear that something had happened, but it wasn’t clear what he’d done. A few days before, Platformer had reported that Musk was frustrated his tweets weren’t getting as much engagement as they did at the end of last year as he was shambolically establishing his control over Twitter. When he pulled together engineers for an answer, one suggested it wasn’t a technical issue, it was simply because “public interest in his antics is waning.” Not a man who will tolerate being told something he doesn’t want to hear, Musk fired the engineer on the spot and set the others to find a fix.

Platformer spoke to employees who said they “mostly move from dumpster fire to dumpster fire,” with no long-term plan, in part because Musk “doesn’t like to believe that there is anything in technology that he doesn’t know, and that’s frustrating.”

There’s times he’s just awake late at night and says all sorts of things that don’t make sense. And then he’ll come to us and be like, ‘this one person says they can’t do this one thing on the platform,’ and then we have to run around chasing some outlier use case for one person. It doesn’t make any sense.

So, before Monday, we knew Musk was concerned about his traction on the platform. He knows his reputation, the value of his companies, and his personal net worth depend, in part, on being seen, whether that’s by his tweets getting attention or having the press cover his antics. But we also know he’s a thin-skinned narcissist who personally thrives on that attention, and that’s why his tweets were everywhere on Monday.

Further reporting by Platformer found that after his Super Bowl tweet on Sunday got less traction than a tweet by US President Joe Biden, Musk was furious and threatened to fire engineers if they didn’t fix it. Their response gave Musk’s posts unprecedented reach on the platform.

Twitter deployed code to automatically “greenlight” all of Musk’s tweets, meaning his tweets will bypass Twitter’s filters designed to show people the best content possible. The algorithm now artificially boosted Musk’s tweets by a factor of 1,000 – a constant score that ensured his tweets rank higher than anyone else’s in the feed.

This incident shows that Twitter is not a public space, and that its prospects of getting better under Musk’s leadership are basically non-existent. He’s a powerful man with an incredibly skewed understanding of the world who surely believes himself to be doing a great service to humanity while truly serving his own interests over those of anyone else. Twitter is slowly being reshaped to privilege Elon Musk and his wrong-headed idea of how it should function, and in the process we’re all in an abusive relationship with him as he further degrades the experience of an online community that’s hard to abandon for people who’ve spent years building connections and friendships on it.

Keanu Reeves Warns of “Corporatocracy”

Keanu Reeves is no stranger to artificial intelligence, virtual worlds, and the harms that can come of technology from his days playing Neo in The Matrix series. But tech is clearly not just the concern of a character he’s playing on the big screen; it’s something he thinks a lot about as well.

In 2021, he laughed about the concept of NFTs during an interview with The Verge and expressed frustration at Meta’s plans to create the metaverse. The past year has proven him to be much better at assessing these trends than the journalist who was interviewing him; NFTs have crashed along with the wider crypto bubble and the metaverse is a joke dragging Meta down. But in a new Wired interview to promote John Wick 4, it’s clear Keanu has more thoughts on the tech industry and where it’s trying to go next.

On his own craft, it’s clear Keanu values the cinematic experience and the performance of an actor. For twenty years, he’s had a clause in his acting contracts that doesn’t let filmmakers digitally alter his performances, and John Wick director Chad Stahelski says that while they’re not “at war with VFX,” “you can’t beat the blood, sweat, and tears of real people.” This concern extends to the growth of deepfakes, which Keanu says take agency away from actors.

When you give a performance in a film, you know you’re going to be edited, but you’re participating in that. If you go into deepfake land, it has none of your points of view. That’s scary. It’s going to be interesting to see how humans deal with these technologies. They’re having such cultural, sociological impacts, and the species is being studied.

But it doesn’t end there. As AI tools are used to make more art and that gets normalized, Keanu isn’t convinced it’s good for society.

It’s cool, like, Look what the cute machines can make! But there’s a corporatocracy behind it that’s looking to control those things. Culturally, socially, we’re gonna be confronted by the value of real, or the nonvalue. And then what’s going to be pushed on us? What’s going to be presented to us?

On the point about the corporatocracy, Keanu is clear about what he sees as the ultimate goal of these AI tools: lower pay and less power for workers.

The people who are paying you for your art would rather not pay you. They’re actively seeking a way around you, because artists are tricky. Humans are messy. […] They’re going to challenge how much they pay you. So everyone’s gonna be an independent worker. Look at all the independence you have! Let’s not have unions.

What’s the point of drawing attention to what an actor has to say about technology? Like last week when I pointed to comments by Steve Wozniak, I think it’s notable when such influential people can so clearly see through the narratives the tech companies are feeding us and which often make their way into reporting about these technologies. In the film industry, tech disruption hasn’t been great for workers: unions are getting increasingly agitated about lower pay and worse terms that have come with the shift to streaming, while studios are already getting actors to sign over the rights to their voices and seem to be trying to get visual effects to a level where they can replicate actors in perpetuity.

Criticism of technology and its real-world impacts is much more common than it often feels, and we shouldn’t be scared to keep pushing these companies and the people who help get people onside with visions of the future that serve corporate bottom lines over the public good.

Some other reading recommendations

- The Financial Times went deep on the messy final days of FTX, with plenty of texts and emails to tell the story.

- In 2022, Amazon took an average of 51.8% of every sale on its ecommerce platform as it squeezes third-party sellers for higher fees. That’s up from 35.2% in 2016.

- Uber has been hiking its fares over the past four years, but hasn’t been passing on the gains to its drivers. After a 50% median fare increase, only 31% found its way into drivers’ pockets.

- FTX customers are suing Sequoia Capital, Thoma Bravo, and Paradigm for giving the crypto exchange an “air of legitimacy” by saying they’d done their due diligence and found everything was “safe and secure” for crypto buyers.

- Voice actors are being forced to sign away the rights to their voices so they can be replicated with AI tools. Some video game voice actors have also had their voices weaponized against them, with malicious actors using AI tools to replicate their own voices to dox and harass them.

- Meta said it would hire 10,000 workers to build the metaverse in the European Union. Now it won’t say whether it followed through.

- OpenAI released a tool to detect ChatGPT-generated text. It doesn’t actually work very well.

- Microsoft used to say its Xbox Game Pass subscription service didn’t cannibalize the sales of games in its library. In an admission to the UK’s Competition and Markets Authority, it acknowledged that’s not true.

- Last month, the Vatican hosted Jewish and Muslim leaders to discuss the impacts of artificial intelligence.

- For Business Insider, I wrote about why AI won’t take our jobs but will be used against workers, and for The Information, about why self-driving cars were a false solution to transport problems.

- A listen instead of a read: I spoke to Malcolm Harris, author of Palo Alto: A History of California, Capitalism, and the World, about the sordid history of Silicon Valley, including the long influence of eugenics at Stanford and how Silicon Valley profited from the United States’ wars throughout the 20th century.

Labor watch

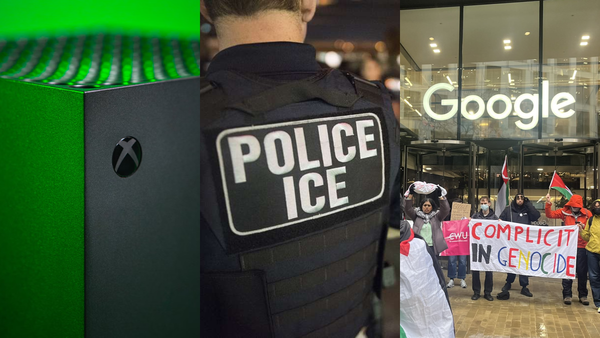

- Tesla data labelers in Buffalo, New York launched the Tesla Workers Union on Tuesday, saying they want better pay, job security, and less use of monitoring software to increase production pressure. The company responded by firing over 30 workers.

- Workers at TCGPlayer, an online trading card marketplace owned by eBay, are trying to unionize, but management is working hard to stop them. They’ve already filed two complaints with the NLRB.

- YouTube Music workers filed to unionize with the Alphabet Workers Union, and were quickly hit with a return-to-office mandate. On February 3, they went on strike from subcontractor Cognizant.

- Brian Merchant spoke to “raters” who are essential to Google Search and its foray into chatbots, but get paid as little as $14 an hour. As Merchant puts it, “There’s a certain cruel irony in the fact that as the highest-profile technology in years makes its debut, the ones best suited to keep it on the rails are also the most precarious at the companies that need them.”

- Blizzard workers are fuming after a Q&A where President Mike Ybarra “allegedly made a bizarre comparison to his and other executives' pay packages to those of rank-and-file employees, appeared to downplay the value of QA and customer service roles at the studio, and defended the company's decision to slash annual profit-sharing bonuses.”

One more thing…

Athena Coalition took the time to replace Alexa’s responses in this Amazon Super Bowl ad with a better reflection of how Amazon’s business actually works.
